What is Split Testing in Email Marketing?

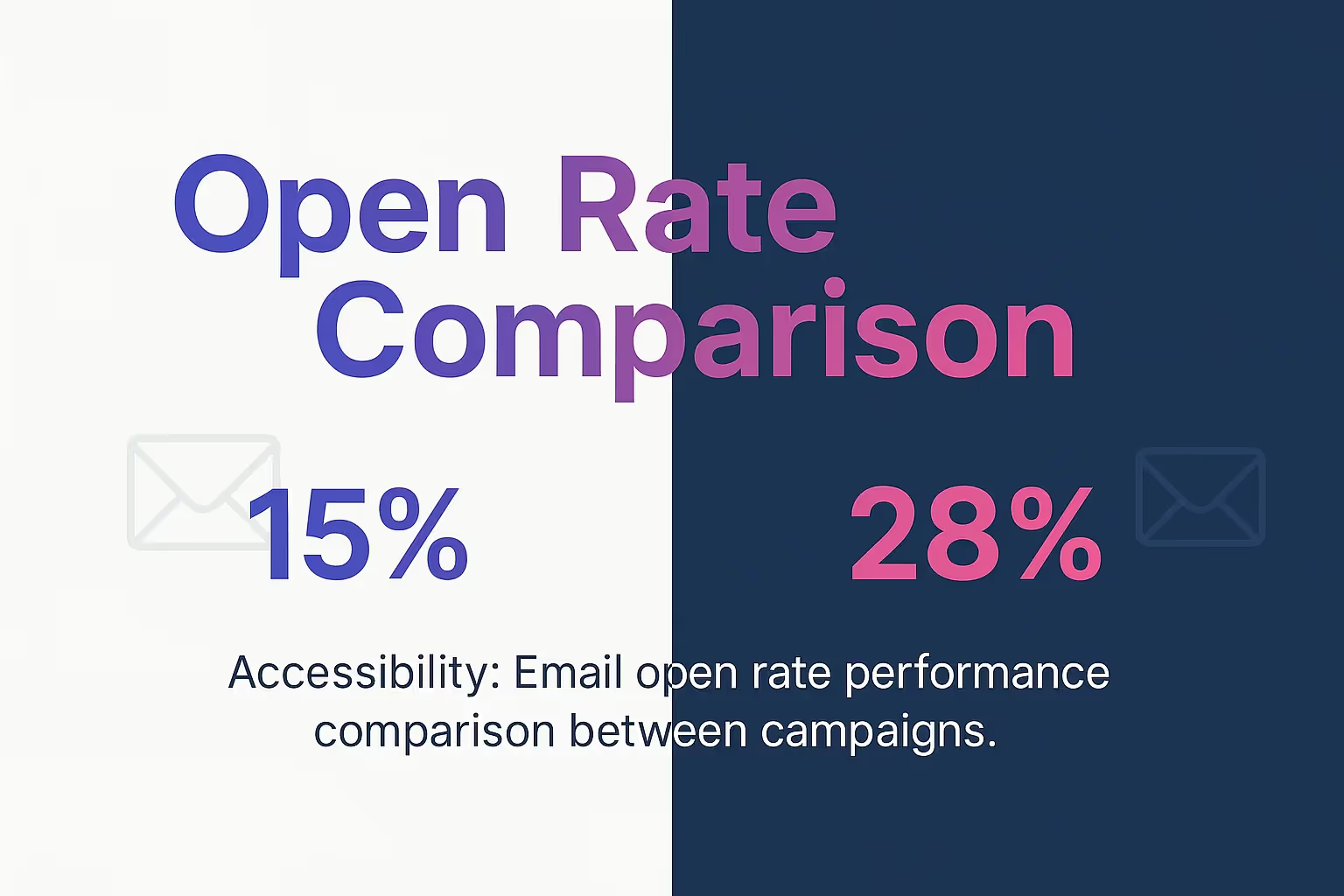

Your last email campaign got a 15% open rate. Your competitor's hit 28%. The difference? They test everything. You send and hope.

Split testing in email marketing means sending two or more versions of an email to randomly selected groups within your audience. Each version differs by one element, such as the subject line, the content, or the send time. You measure performance through open rates, clicks, and conversions to find what works best.

This method removes guesswork from your campaigns. Instead of wondering why emails underperform, you get concrete data about what drives engagement. Email marketing is often cited as returning an average of $42 for every $1 spent, but results like that depend on optimizing for real audience behavior rather than assumptions.

We'll cover how split testing works, what elements to test, and proven strategies that turn mediocre campaigns into revenue drivers. You'll learn to make data-driven decisions that boost your email performance consistently.

Understanding Split Testing Fundamentals

Split testing, also called A/B testing, divides your email list into groups, and each group receives a different version of your message. Each variation changes a single variable, so any difference in your chosen metric can be traced back to that change.

The key principle is isolation: change only one element per test. If you modify both the subject line and the call-to-action button, you can't tell which change affected performance. Isolating a single variable is what makes the results trustworthy.

Core Components of Email Split Tests

Every split test needs four essential elements. First, you need a hypothesis about what might improve performance. Second, create variations that test this hypothesis. Third, randomly assign subscribers to each group. Fourth, measure results using predetermined metrics.

Your control group receives the original email version. Test groups get the variations. This method helps marketers make data-driven decisions to optimize campaigns for higher engagement and conversions.

Statistical Significance in Email Testing

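Results matter only when they are statistically significant, meaning the difference between versions is unlikely to be due to random chance. Significance depends on how many people the test reached and how large the difference is. Small lists might need longer test periods or repeated sends to gather enough data. Large lists can show significant results quickly.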

Most email platforms calculate significance automatically. Look for a confidence level of 95% or higher (equivalent to a p-value below 0.05). Lower confidence suggests you need more data before making decisions.
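Platforms don't always document which test they run. One common method for comparing two rates is the two-proportion z-test, sketched below in Python. It assumes you can export opens (or clicks) and sends for each version; the function name and the numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, sends_a, successes_b, sends_b):
    """Return (z, two-sided p-value) for the difference between two rates,
    e.g. opens per send for version A and version B."""
    rate_a = successes_a / sends_a
    rate_b = successes_b / sends_b
    # Pooled rate under the null hypothesis that both versions perform the same.
    pooled = (successes_a + successes_b) / (sends_a + sends_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    if std_err == 0:  # nobody (or everybody) opened either version
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical result: 1,000 sends per version, 150 vs 190 opens.
z, p = two_proportion_z_test(150, 1000, 190, 1000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95% confidence: {p < 0.05}")
```

A p-value below 0.05 corresponds to the "above 95% confidence" threshold most platforms display.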

| List Size | Minimum Test Group | Expected Timeline |
|---|---|---|
| 1,000-5,000 | 200 per variation | 1-2 weeks |
| 5,000-20,000 | 500 per variation | 3-7 days |
| 20,000+ | 1,000 per variation | 1-3 days |
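The group sizes above are rough rules of thumb. A standard power calculation for two proportions shows how the required size depends on your baseline rate and the smallest lift you care about. This Python sketch assumes a two-sided test at 95% confidence and 80% power; the function name and the rates are illustrative.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate recipients needed per version to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    mean_rate = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * sqrt(2 * mean_rate * (1 - mean_rate))
        + z_beta * sqrt(baseline_rate * (1 - baseline_rate)
                        + expected_rate * (1 - expected_rate))
    ) ** 2
    return ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical goal: lift a 15% open rate to 18%.
needed = sample_size_per_variation(0.15, 0.18)
print(needed)
```

For a 15% baseline and an 18% target this works out to roughly 2,400 recipients per version, so groups of a few hundred can reliably detect only much larger differences.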

Essential Elements to Test in Email Campaigns

Now that you understand the testing framework, focus on elements that impact performance most. Start with high-impact variables before testing minor details.

Subject Line Variations

Subject lines are one of the biggest drivers of open rates. Test length, personalization, urgency, and curiosity gaps. Short subjects often work well on mobile devices, where long ones get cut off. Longer subjects can provide more context.

Try these approaches: personalized vs generic, question vs statement, emoji vs text-only. Track which style resonates with your audience. Personalized subject lines typically increase open rates, but test to confirm with your specific list.

- Length variations (5 words vs 10+ words)

- Personalization levels (name, company, location)

- Emotional triggers (urgency, curiosity, benefit)

- Format styles (questions, statements, lists)

Send Time and Day Testing

Timing affects open and click rates significantly. Your audience might check email during lunch breaks, early mornings, or evening commutes. Industry averages provide starting points, but your audience is unique.

Test Tuesday vs Thursday sends. Compare 10 AM vs 2 PM vs 6 PM. Track performance across multiple sends to identify patterns rather than trusting a single result. The right send time can noticeably improve campaign results.
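Comparing three send times at once calls for a test that handles more than two groups. One standard option is a chi-square test of independence on opened vs not-opened counts. The Python sketch below is deliberately limited to exactly three variations, which makes the p-value a simple closed form; the function name and counts are hypothetical, and it assumes opens are counted over the same window for each slot.

```python
from math import exp


def chi_square_three_groups(opens, sends):
    """Chi-square test of independence for opens across exactly three send times.
    Returns (chi-square statistic, p-value)."""
    if len(opens) != 3 or len(sends) != 3:
        raise ValueError("this sketch handles exactly three variations")
    overall_rate = sum(opens) / sum(sends)
    chi2 = 0.0
    for opened, sent in zip(opens, sends):
        # Expected counts if send time made no difference.
        expected_open = sent * overall_rate
        expected_closed = sent * (1 - overall_rate)
        chi2 += (opened - expected_open) ** 2 / expected_open
        chi2 += ((sent - opened) - expected_closed) ** 2 / expected_closed
    # Three groups give 2 degrees of freedom, and the chi-square
    # survival function for 2 degrees of freedom is exactly exp(-x / 2).
    p_value = exp(-chi2 / 2)
    return chi2, p_value


# Hypothetical results: 1,000 sends each at 10 AM, 2 PM, and 6 PM.
chi2, p = chi_square_three_groups(opens=[160, 180, 210], sends=[1000, 1000, 1000])
print(f"chi-square = {chi2:.2f}, p = {p:.4f}")
```

A small p-value says the send times differ overall; it does not say which one is best, so follow up with pairwise comparisons before picking a winner.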

Call-to-Action Optimization

Your CTA drives conversions. Test button colors, text, placement, and size. Action-oriented language often outperforms passive phrases: "Get Started Now" frequently beats "Learn More," though your audience may differ.

Button placement matters too. Test CTAs above the fold vs at the bottom of the email, and multiple CTAs vs a single focused one. The winning approach depends on your content length and audience behavior.

Implementing Your First Split Test Campaign

With testing elements identified, create your first split test campaign. Most email platforms like Mailchimp, GetResponse, or AWeber include built-in testing features.

Setting Up Test Parameters

Start with your email platform's split testing feature. Choose your test variable and create two versions. Set your test percentage, typically 10-20% of your list for each variation. The remaining subscribers wait for the winning version.
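Random assignment is what makes the comparison fair, and your platform handles it for you. To make the idea concrete, here is a minimal Python sketch that assumes your list is a plain list of addresses; `split_for_test` and the 10% default are illustrative, not any platform's API.

```python
import random
from typing import Dict, List, Optional


def split_for_test(subscribers: List[str], test_fraction: float = 0.10,
                   seed: Optional[int] = None) -> Dict[str, List[str]]:
    """Randomly assign subscribers to version A, version B, and a holdout
    that later receives the winning version."""
    if not 0 < test_fraction <= 0.5:
        raise ValueError("test_fraction must be in (0, 0.5]")
    rng = random.Random(seed)
    shuffled = subscribers[:]  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    group_size = int(len(shuffled) * test_fraction)
    return {
        "A": shuffled[:group_size],
        "B": shuffled[group_size:2 * group_size],
        "holdout": shuffled[2 * group_size:],
    }


# Hypothetical list of 5,000 addresses, 10% per variation.
emails = [f"user{i}@example.com" for i in range(5000)]
groups = split_for_test(emails, test_fraction=0.10, seed=42)
print({name: len(members) for name, members in groups.items()})
```

Shuffling before slicing means no ordering in the original list (signup date, alphabetical order) can leak into one group.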

Define success metrics before sending. Focus on one primary goal: open rates for subject line tests, click rates for content tests, conversions for CTA tests. Secondary metrics provide context but shouldn't drive decisions.

Most platforms automatically send the winning version to the remaining subscribers after a predetermined time. Set this window based on your typical engagement pattern, usually 2-24 hours.

Creating Effective Test Variations

Make meaningful changes between versions. Subtle tweaks rarely show significant differences. For subject line tests, try completely different approaches rather than minor word changes.

| Test Type | Version A | Version B |
|---|---|---|
| Subject Line | "New Product Launch" | "Launch Day: 50% Off Everything" |
| CTA Button | "Learn More" | "Get 50% Off Now" |
| Send Time | Tuesday 10 AM | Thursday 2 PM |
| Email Length | Brief 3-paragraph version | Detailed multi-section version |

Analyzing Results and Taking Action

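Wait for statistical significance before drawing conclusions, and decide in advance how many recipients the test needs. Checking repeatedly and stopping as soon as one version pulls ahead makes it much more likely that you crown a false winner. Review both primary and secondary metrics to understand the full impact.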
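Why does checking early mislead? A small A/A simulation in Python makes it concrete: both versions are identical, so every "significant" result is a false positive. The assumptions (20% true open rate, a check after every batch of 100 sends per version, 20 checks per test) are illustrative.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(opens_a, sends_a, opens_b, sends_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (opens_a + opens_b) / (sends_a + sends_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    if std_err == 0:
        return 1.0
    z = (opens_a / sends_a - opens_b / sends_b) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(n_tests=400, batch=100, n_checks=20, true_rate=0.20, seed=1):
    """Return (false-winner rate when stopping at the first significant check,
    false-winner rate when checking only once at the end)."""
    rng = random.Random(seed)
    peeking_false_wins = final_false_wins = 0
    for _ in range(n_tests):
        opens_a = opens_b = sends = 0
        ever_significant = False
        for _ in range(n_checks):
            # Send one more batch to each version, then look at running totals.
            opens_a += sum(rng.random() < true_rate for _ in range(batch))
            opens_b += sum(rng.random() < true_rate for _ in range(batch))
            sends += batch
            if p_value(opens_a, sends, opens_b, sends) < 0.05:
                ever_significant = True  # a "peeker" would stop here and declare a winner
        peeking_false_wins += ever_significant
        final_false_wins += p_value(opens_a, sends, opens_b, sends) < 0.05
    return peeking_false_wins / n_tests, final_false_wins / n_tests


peeking_rate, final_rate = simulate_aa_tests()
print(f"false winners with peeking: {peeking_rate:.1%}, single final check: {final_rate:.1%}")
```

With a single final check the false-winner rate should sit near the nominal 5%; checking after every batch pushes it well above that.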

Document your findings in a testing log. Note winning elements, audience segments, and performance differences. Use these insights to inform future campaigns and testing strategies.
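A spreadsheet works fine as a testing log. If you prefer to script it, here is a minimal Python sketch that appends each result to a CSV file; the field names, file name, and example entry are assumptions, not a standard format.

```python
import csv
import os
from dataclasses import asdict, dataclass, fields


@dataclass
class SplitTestRecord:
    sent_on: str         # ISO date of the test send
    element: str         # variable tested, e.g. "subject line"
    segment: str         # audience segment, or "all"
    version_a: str
    version_b: str
    primary_metric: str  # e.g. "open rate"
    rate_a: float
    rate_b: float
    p_value: float
    winner: str          # "A", "B", or "inconclusive"


def append_to_log(record: SplitTestRecord, path: str = "split_test_log.csv") -> None:
    """Append one result to a CSV log, writing the header if the file is new."""
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=[f.name for f in fields(SplitTestRecord)])
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(record))


# Hypothetical entry for a subject-line test.
append_to_log(SplitTestRecord(
    sent_on="2024-03-05", element="subject line", segment="all",
    version_a="New Product Launch", version_b="Launch Day: 50% Off Everything",
    primary_metric="open rate", rate_a=0.150, rate_b=0.190, p_value=0.017, winner="B",
))
```

Recording the segment and primary metric alongside each result makes it easier to spot patterns later.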

Implement winning elements in your standard email templates, but keep testing: audience preferences change over time. What works today might underperform next quarter.

Advanced Split Testing Strategies

Basic A/B tests provide valuable insights, but advanced strategies unlock deeper optimization opportunities. These techniques require larger lists and more sophisticated tracking.

Multivariate Testing Approaches

Multivariate tests examine multiple variables simultaneously. Instead of testing just subject lines, test subject lines AND send times together. This reveals interaction effects between variables.

You'll need a larger subscriber list for meaningful results, because every combination requires a sufficient sample size of its own. With three variables and two options each, you need 2 × 2 × 2 = 8 test groups, each large enough to support a statistically significant comparison.
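To see how quickly the groups multiply, this Python sketch enumerates a full-factorial design for three two-option variables (reusing the example versions from the variation table) and multiplies by an assumed per-cell minimum. The 1,000-per-cell figure is hypothetical; use a sample-size calculation for your own rates.

```python
from itertools import product

# Three variables with two options each (examples from the variation table).
variables = {
    "subject": ["New Product Launch", "Launch Day: 50% Off Everything"],
    "send_time": ["Tuesday 10 AM", "Thursday 2 PM"],
    "cta": ["Learn More", "Get 50% Off Now"],
}

# Full-factorial design: every combination of options becomes one test cell.
cells = [dict(zip(variables, combo)) for combo in product(*variables.values())]

min_per_cell = 1_000  # hypothetical minimum recipients per cell
print(len(cells), "cells;", len(cells) * min_per_cell, "recipients needed")
for number, cell in enumerate(cells, start=1):
    print(number, cell)
```

Adding a fourth two-option variable would double the cell count again, which is why multivariate tests suit high-volume lists.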

Use multivariate testing for major campaign launches or when you have consistent high-volume sends. Advanced list building helps reach the subscriber thresholds needed for complex tests.

Segmentation-Based Testing

Different audience segments respond to different messages. Test the same variations across customer segments, geographic regions, or engagement levels. New subscribers might prefer different content than long-term customers.

Create segment-specific test strategies. B2B audiences often respond to data-driven subject lines. B2C audiences might prefer emotional appeals. Test these assumptions with your actual segments rather than relying on generalizations.

- Identify your key segments (behavior, demographics, lifecycle stage)

- Create segment-specific test hypotheses

- Run parallel tests across segments

- Document segment preferences for future campaigns
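Running parallel tests across segments works best when each segment is split at random on its own, so every segment gets a balanced A/B comparison. A minimal Python sketch, assuming each subscriber carries exactly one segment label; the function and segment names are hypothetical.

```python
import random
from collections import defaultdict


def assign_within_segments(subscribers, seed=None):
    """subscribers: iterable of (email, segment) pairs.
    Returns {segment: {"A": [...], "B": [...]}}, with each segment split
    roughly 50/50 at random so every segment gets its own A/B comparison."""
    rng = random.Random(seed)
    by_segment = defaultdict(list)
    for email, segment in subscribers:
        by_segment[segment].append(email)
    assignment = {}
    for segment, emails in by_segment.items():
        rng.shuffle(emails)  # shuffle within the segment only
        half = len(emails) // 2
        assignment[segment] = {"A": emails[:half], "B": emails[half:]}
    return assignment


# Hypothetical list: 300 new subscribers and 701 long-term customers.
subscribers = [(f"new{i}@example.com", "new") for i in range(300)] + \
              [(f"loyal{i}@example.com", "long_term") for i in range(701)]
groups = assign_within_segments(subscribers, seed=7)
for segment, arms in groups.items():
    print(segment, len(arms["A"]), len(arms["B"]))
```

Analyze each segment separately with the same significance test. The more segments you test, the more likely one shows a chance "win", so confirm surprising segment results with a follow-up test.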

Sequential Testing Programs

Build testing into your regular campaign schedule. Test one element per send rather than trying to optimize everything simultaneously. This systematic approach builds a knowledge base over time.

Month one: focus on subject lines. Month two: test send times. Month three: optimize CTAs. This progression lets you apply learnings from previous tests while discovering new insights.

Document results in a central location. Track winning elements, performance improvements, and audience insights. CTA optimization strategies can guide your testing sequence priorities.

Quick Answers to Common Questions

What is email split testing?

Email split testing is the process of sending two or more variations of an email campaign to randomly selected groups within your audience to determine which version performs better based on metrics like open rates or click-through rates.

What is an example of a split test?

A split test might involve sending two versions of an email with different subject lines to separate groups within your audience to see which subject line results in higher open rates.

What is the purpose of split testing?

The purpose is to identify which version of an email performs better by comparing user engagement or conversion rates, allowing marketers to make data-driven decisions that improve campaign effectiveness and ROI.

Split testing transforms email marketing from guesswork into science. Start with single-element tests using your platform's built-in features. Focus on high-impact variables like subject lines and send times first.

Your first test might show a 5% improvement in open rates. Your tenth test might reveal a 30% boost in click-through rates. Each test builds knowledge about your audience preferences and behavior patterns.

Begin today with your next scheduled send. Pick one element to test, create two variations, and measure the results. Apply these insights to build more effective campaigns that drive real business results.
